Overview

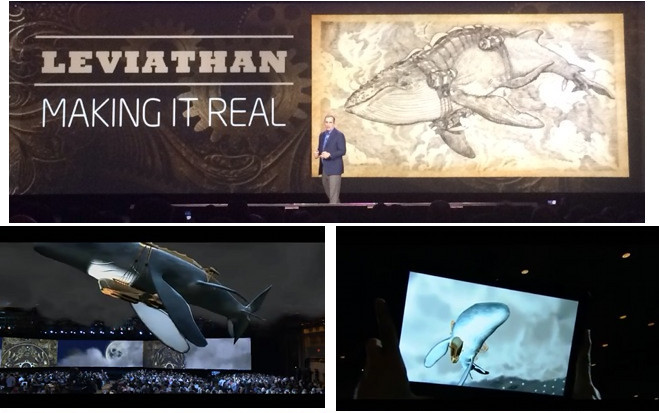

At CES 2014, Intel showcased three Augmented Reality demonstrations based upon the world of Leviathan. One demo was part of the opening Keynote presentation given by Brian Krzanich, CEO of Intel (it starts around the 49:30 mark, or you can see a video of just our part in the Keynote section below). The other two (Spotlight and Frame) ran in the Intel booth during CES. I describe each demo in more detail in the three sections below.

Intel created these demonstrations to excite people about the potential for Augmented Reality to enable new forms of media for storytelling, and to send a message that Intel intends to enable innovation in this space. These were high-risk, high-visibility demonstrations, but they were very successful and were a key part of Intel's message that we champion immersive experiences and innovation.

Our demos were inspired by the steampunk fantasy world of Leviathan in which genetic engineering was discovered very early, so in some countries a biological revolution supplanted the industrial revolution. People fabricated new types of living creatures to serve the roles of dirigibles, cars and other vehicles. The Leviathan itself is a gigantic airship that looks like a flying whale. We are fortunate that the author, Scott Westerfeld, allows us to use his content as a rich world to inspire and explore new forms of media and storytelling.

Tawny Schlieski champions the Leviathan project within Intel Labs, and she recruited me and my team to help with the technical execution. We partner with the World Building Media Lab in the USC School of Cinematic Arts. The director of the World Building Media Lab is Alex McDowell, who advocates immersive design and has been a designer on many films including Minority Report and Man of Steel.

I wrote the tracker that provided the AR tablet view of Leviathan in the Keynote, and I personally operated the tablet during the Keynote. My team built the AR application framework that ran on the tablet. I was the main Intel technical expert advising the development and execution of the three Leviathan demos, and I manned the Intel booth to help run the two booth demos.

You can access a press kit at this link.

1) Keynote

The theme of Brian Krzanich’s opening CES 2014 keynote was “immersive experience.” We provided the biggest example of that by using Augmented Reality techniques to fly a whale above the heads of a live audience of 2,500 people who weren’t expecting it.

You can watch the embedded video below to see our part of the keynote, or you can download an HD version of the Keynote footage (MP4 format, 64 MB).

We received the loudest applause of any segment in the keynote. Making a virtual creature appear via Augmented Reality is hardly new, but the scale of this effort, combined with the beautifully rendered content, music that was specifically composed for this event, and an audience that was not expecting something like this, all made it a compelling experience.

People in the audience saw the augmentations only when they looked at the big displays up in front, or if they looked at the tablet I held up in the audience. They could not see the whale when they looked up toward the roof of the auditorium. This is analogous to watching an American football game in a stadium. The people in the stadium cannot see the yellow first-down line augmentation by looking directly at the field. They can only see it by looking at the big display in the stadium, or by watching at home on TV. The next image gives you an idea of what the live audience saw on the big screens.

Building this experience for the keynote was a challenging and risky project, for two reasons. First, we had only a few weeks of preparation time and limited access to the auditorium. This auditorium was shared with two other CES keynotes, so we were restricted in the hours we could access the space. Second, we had to make this work with the auditorium as it was, without being able to modify it to help with tracking the tablet. See the image below to get an idea of what the auditorium looked like during the keynote: dark, with large dynamic areas (the crowd and the big screens). This environment is very different from what most Augmented Reality demos operate in.

Despite the challenges, the experience worked nearly perfectly, as you can see from the video. I proposed and built a tracking solution for the tablet that worked well enough to carry the experience.

2) Spotlight

As Brian Krzanich stated in his keynote speech, CES attendees could also experience Leviathan in the Intel booth. The main demonstration ran in our Spotlight stage. We ran a total of nine performances during CES. This was a more personal version of the Keynote demonstration, where participants could both see the whale on the big screen and also see it from their own vantage points. We distributed 20 convertible Ultrabooks (devices that can operate both as a tablet and a traditional laptop) to the audience before each presentation. The Ultrabooks provided AR views of the whale and let audience members interact with baby Huxley creatures (small personal airship creatures).

The following video shows one Spotlight performance, showing all parts of the experience:

The next video shows the view of the whale leaving the screen and flying around the stage, from the perspective of one Ultrabook:

The next video shows the view of the whale returning to the screen, from the perspective of one Ultrabook:

Metaio built a custom tracker to track the Ultrabooks in the Spotlight demo. If you watch the videos, you will notice artifacts where the tracking is noisy and exhibits large jumps. But what you should realize is that it was a major accomplishment to get any tracking working in this environment. Take a look at the picture below to see what the Spotlight environment looked like:

The Intel booth design has a very clean, uncluttered look. This may look attractive but it is not conducive at all to supporting AR tracking. There are few visual features suitable for tracking. The lighting is dim. We were not allowed to modify the booth geometry, and we were permitted to make only a few changes in displays and lighting to help the tracking. And since this was CES, our booth was always packed with hundreds of people, adding many dynamic elements to the scene.

Worst of all, the booth was an ephemeral site. It was not completely built until a day before the doors opened, and it operated for only four days. We could not take an approach of installing an active tracking system to work in this environment: there wasn't enough time, and the booth wouldn’t exist long enough to make that worthwhile. Therefore the tracking had to work solely based on the sensors in our Ultrabooks. Because the booth was under construction almost until the doors opened, we had little time to train, test, and refine both the tracker and the applications that ran in this space. It was a very difficult situation, which made this demo an ambitious and risky project.

This is very different from typical AR demos that track markers or flat images designed to support tracking, or research systems that demonstrate computer vision tracking in environments with lots of good features to track, under good lighting.

So keep all this in mind when you watch the videos and judge the AR experience. I think it is impressive it worked as well as it did. Our implementation was sufficient to convey the potential of merging compelling virtual storytelling content into the real environment, and enabling people to interact with that content.

3) Frame

The other Leviathan demo in the Intel booth occurred at the Frame, and this demo ran continuously during all hours that the Intel booth was open. The Frame was a 3D sculpture showing how convertible Ultrabooks work. Below are two photos of the Frame sculpture:

The experience at the Frame focused on baby Huxley creatures. We showed them circling around the Frame via Augmented Reality. When a visitor touched one of them, it would fly up to the screen, and then the visitor could interact with the Huxley in a variety of ways, such as spinning it around or grabbing it by one of its tentacles. You can see such interactions in the video of the Spotlight demo (previous section).

The photo below shows the Huxley creatures flying around the Frame:

The next photo is an example of interacting with a Huxley:

Here is a photo of our table by the Frame where we put the equipment when visitors were not walking around the Frame with the tablets:

Metaio built a custom tracker to provide the tracking around the Frame. This was a difficult challenge for AR tracking. Unlike the flat images or markers that most AR experiences use for tracking, the Frame is a complex 3D object with dynamic elements. The images displayed on the Ultrabooks mounted on the Frame changed over time. CES is a crowded trade show, so we always had people walking in front of or behind the Frame. The lights and displays in the background also changed constantly. Finally, the lighting within the Intel booth itself dynamically changed, so that the illumination on the Frame sculpture was not constant.

On top of that, the Frame object itself was not completely built until the day before the doors opened for CES, so there was little time to train and tune the tracker and application.

Despite all these difficulties, the tracking was surprisingly robust and people could walk around the Frame and see the Huxley augmentations from many different viewpoints. Below is a video of a person walking around the Frame, rotating about 90 degrees around the Frame object. This demonstrates that the system tracked on this 3D object even with large changes in viewpoint:

Equipment and contractors

In the Keynote and Spotlight, we used Mac Minis with Intel Core i7 processors and Intel integrated graphics (Intel HD Graphics 4000) as servers and render engines providing the graphics seen on the big screens. In all three experiences, we used Lenovo Helix 2-in-1 Ultrabooks with Intel Core i7 processors and Intel integrated graphics. In the Keynote and Frame experiences, we used the Lenovos in tablet mode, where the display was removed from the base. In the Spotlight, we kept the display on the base for performance reasons. In all three experiences, everything was generated in real time. There were no prerendered graphics.

To make Leviathan a reality, Intel engaged the services of Metaio and a production company called Wondros. Metaio provided the tracking in the Spotlight and Frame demos, and Wondros built all the applications and ran the productions, with input from Intel Labs and the USC World Building Media Lab.

Related materials

I gave a talk about Leviathan at the AWE 2014 event. The Examiner wrote an article on AWE 2014 that described my talk on Leviathan. Two corrections on that article: Instead of an iPad, I used an Intel 2-in-1 in tablet mode, and I was not guiding the whale, but rather following the whale’s motions.

Below is a video that summarizes how Leviathan is a vehicle for exploring the future of storytelling. It includes interviews with Tawny Schlieski, Alex McDowell, Genevieve Bell and others, and features the work at the World Building Media Lab at USC:

Please follow this link for a 7 minute video interviewing people about the Leviathan project, including Scott Westerfeld.

Here is a link to another interview with Tawny Schlieski talking about Leviathan and storytelling. It is about 3 minutes long.

The USC World Building Media Lab won the Future Voice award at IXDA 2014, and you can see a video of Genevieve Bell talking about this award and Leviathan.

Finally, you should check out the last video below to see the impressive Scavenger Bot Augmented Reality demo. This was shown in the Intel keynote right before Leviathan. It features a depth sensor integrated into a tablet, enabling an AR application to add a virtual robot into a real environment with blocks and laundry. The robot can navigate through the environment and be correctly occluded behind real objects. When the real environment changes, the system can rescan the environment and adapt accordingly. I did not work on this; it was done by a different part of Intel. But it was another Intel AR demo given in the same event as Leviathan.
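To make the occlusion idea concrete, here is a minimal sketch of a per-pixel depth test, the general technique by which a depth sensor lets a virtual object be hidden behind real ones. This is not the Scavenger Bot implementation, which I did not work on. The function name, the toy one-row images, and the depth values are all assumptions made up for illustration.

```python
from typing import List, Optional

# Hypothetical types for illustration: a pixel is just a label here,
# and None in the virtual layer means "no virtual object at this pixel".
Image = List[List[str]]
VirtualLayer = List[List[Optional[str]]]
DepthMap = List[List[float]]


def composite_with_occlusion(
    camera: Image,
    real_depth: DepthMap,
    virtual: VirtualLayer,
    virtual_depth: DepthMap,
) -> Image:
    """Overlay virtual pixels on the camera image using a per-pixel depth test.

    A virtual pixel is drawn only where the virtual object exists AND is
    closer to the camera than the real surface measured by the depth sensor.
    Everywhere else the camera pixel is kept, so real objects in front of
    the virtual object hide it.
    """
    output: Image = []
    for y, camera_row in enumerate(camera):
        output_row = []
        for x, camera_pixel in enumerate(camera_row):
            virtual_pixel = virtual[y][x]
            if virtual_pixel is not None and virtual_depth[y][x] < real_depth[y][x]:
                output_row.append(virtual_pixel)  # virtual object is in front
            else:
                output_row.append(camera_pixel)   # real scene wins
        output.append(output_row)
    return output


# Toy example: one row of three pixels, depths in meters (made-up values).
camera = [["block", "floor", "floor"]]
real_depth = [[1.0, 3.0, 3.0]]        # a real block sits 1 m away at pixel 0
virtual = [["robot", "robot", None]]  # the robot covers pixels 0 and 1
virtual_depth = [[2.0, 2.0, 2.0]]     # the robot stands 2 m away

result = composite_with_occlusion(camera, real_depth, virtual, virtual_depth)
print(result)
```

In this toy scene the robot is hidden at the first pixel because the real block is closer, and visible at the second pixel because it stands in front of the floor. When the real environment changes, rescanning amounts to refreshing the real depth map before compositing again.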