TL;DR (Executive Summary)

Most 3D displays are stereo displays, which force the viewer to accommodate (focus) to a fixed distance, causing eyestrain. This is a particular problem in optical see-through AR displays, where the real world is seen with correct depth cues but the virtual content is not. Light field displays more accurately reproduce the beams of light emitted from real objects, enabling viewers to accommodate to different depths. Therefore, light field displays can reduce or avoid a major source of eyestrain. Light field displays are often considered impractical because the brute-force approach to implementing them requires absurdly high display resolutions. We propose an approach for building practical light field displays based on tracking the eye positions of a single viewer. We verified this approach by building prototypes with display resolutions available today or in the near future.
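To make the resolution argument concrete, here is a back-of-envelope pixel count. It is a simplified counting model, not the method or analysis described in the paper: the 4 mm pupil, the criterion of at least two distinct rays across the pupil per axis, the 300 mm × 300 mm brute-force viewing zone, and the 1280 × 720 spatial resolution are all hypothetical numbers chosen for illustration, and the eye-tracked case ignores any margin for tracking error.

```python
import math

PUPIL_MM = 4.0         # assumed pupil diameter (hypothetical)
RAYS_ACROSS_PUPIL = 2  # assumed minimum distinct rays per pupil, per axis


def views_per_axis(zone_mm: float) -> int:
    """Ray directions needed along one axis to tile a zone at the eye plane.

    Ray spacing at the eye is limited to PUPIL_MM / RAYS_ACROSS_PUPIL so that
    at least RAYS_ACROSS_PUPIL rays enter the pupil along that axis.
    """
    spacing_mm = PUPIL_MM / RAYS_ACROSS_PUPIL
    return math.ceil(zone_mm / spacing_mm)


def panel_pixels(spatial_w: int, spatial_h: int,
                 zone_w_mm: float, zone_h_mm: float, zones: int = 1) -> int:
    """Panel pixels = spatial samples x ray directions per spatial sample."""
    rays = views_per_axis(zone_w_mm) * views_per_axis(zone_h_mm) * zones
    return spatial_w * spatial_h * rays


if __name__ == "__main__":
    # Brute force: full-parallax rays tiling a hypothetical 300 mm x 300 mm zone.
    brute = panel_pixels(1280, 720, 300, 300)
    # Eye-tracked (idealized): rays only cover a pupil-sized box around each eye.
    tracked = panel_pixels(1280, 720, PUPIL_MM, PUPIL_MM, zones=2)
    print(f"brute force: {brute:,} pixels")
    print(f"eye-tracked: {tracked:,} pixels")
    print(f"ratio:       {brute / tracked:,.0f}x")
```

Under these assumptions the brute-force design needs about 20.7 billion panel pixels, while covering only the two tracked pupils needs about 7.4 million, which illustrates why restricting the rays to the viewer's eyes brings the required resolution within reach of real panels.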

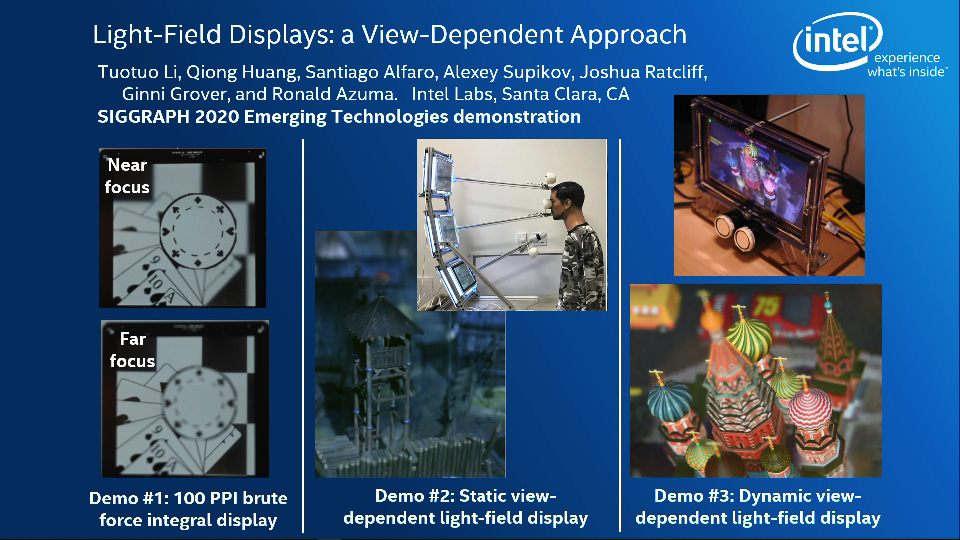

The following 3-minute video (no audio) provides an overview of the approach and our three demos:

Details

To learn more about this project, please watch the following 14-minute presentation from SIGGRAPH 2020:

Also, please read our Display Week 2020 paper:

Li, Tuotuo, Qiong Huang, Santiago Alfaro, Alexey Supikov, and Ronald Azuma. "View-Dependent Light-Field Display that Supports Accommodation Using a Commercially-Available High Pixel Density LCD Panel." Display Week 2020 (San Jose, CA, 3-7 August 2020).

Unfortunately, watching videos of these displays is not nearly as compelling as viewing them in person. When you see these displays directly, you perceive a 3D scene, which you cannot see in the 2D videos on this webpage. Furthermore, the viewer can focus at different depths simply by fixating on different objects in the 3D scene, just as one would in real life. Because the experience more closely matches real life, these light field displays are more comfortable to view than a typical stereo display. I have stared at these displays for a long time and do not feel the eyestrain I usually do with stereo displays. Also, one observer who could not see stereo was able to see 3D with these displays.

Our prototypes have significant limitations. We reduced Moiré patterns by rotating the lenslet array. Because we could not integrate a directional backlight, the viewing distances are constrained, and it is very difficult to make the system responsive enough that viewers do not see disturbing artifacts when they move their heads left and right. The depth of field is limited to several inches, so it is not possible to depict a 3D scene whose depth range spans several feet or more. Diffraction limits the ultimate performance of this approach. Our prototypes provide spatial resolutions of 72 pixels per inch (PPI) and 100 PPI, which are lower than the “retinal display” resolutions of some phones. However, if we increased the spatial resolution to 200 or 300 PPI, diffraction would limit our ability to provide the directional rays of light necessary to support accommodation.
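The diffraction trade-off can be checked with a rough estimate. The sketch below assumes one lenslet per spatial pixel (so the lenslet aperture equals the pixel pitch, 25.4 mm / PPI), green light at 550 nm, a hypothetical 500 mm viewing distance, and a simplified criterion of at least two distinct rays across a 4 mm pupil (a 2 mm ray spacing at the eye). It estimates each beam's width at the eye as the aperture plus a small-angle diffraction spread of (wavelength / aperture) × distance. These are illustrative assumptions, not the prototypes' actual parameters.

```python
WAVELENGTH_MM = 550e-6    # assumed: green light, 550 nm
VIEW_DISTANCE_MM = 500.0  # hypothetical viewing distance
RAY_SPACING_MM = 2.0      # assumed: 4 mm pupil with >= 2 rays across it
MM_PER_INCH = 25.4


def aperture_mm(ppi: float) -> float:
    """Lenslet aperture, assuming one lenslet per spatial pixel."""
    return MM_PER_INCH / ppi


def beam_width_at_eye_mm(ppi: float) -> float:
    """Rough beam width at the eye: geometric aperture plus diffraction spread.

    Uses the small-angle estimate theta ~ wavelength / aperture, an
    order-of-magnitude figure (a circular aperture's first Airy zero is at
    1.22 times this angle).
    """
    a = aperture_mm(ppi)
    return a + (WAVELENGTH_MM / a) * VIEW_DISTANCE_MM


def rays_resolvable(ppi: float) -> bool:
    """True if each beam stays narrower than the ray spacing at the pupil."""
    return beam_width_at_eye_mm(ppi) < RAY_SPACING_MM


if __name__ == "__main__":
    for ppi in (72, 100, 200, 300):
        print(f"{ppi:>3} PPI: aperture {aperture_mm(ppi):.3f} mm, "
              f"beam at eye {beam_width_at_eye_mm(ppi):.2f} mm, "
              f"resolvable: {rays_resolvable(ppi)}")
```

With these numbers, beams from 72 and 100 PPI apertures stay narrower than the 2 mm ray spacing (about 1.1 mm and 1.3 mm), while at 200 and 300 PPI they widen to about 2.3 mm and 3.3 mm, so neighboring rays blur together inside the pupil. Shrinking the aperture raises spatial resolution but widens the diffraction spread, which is why higher PPI does not simply translate into better accommodation support.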

Despite the limitations, we believe our work brings the field one step closer to 3D displays that support depth cues correctly, which is a crucial step in making 3D, VR and AR displays more widely accepted by consumers.

A group of people worked on this project. Tuotuo Li proposed the approach and did the bulk of the implementation. Qiong Huang designed and built the eye position tracking system. I served as the research manager of this project.